How might we...

How might we improve the experience of individuals with mobility challenges in NYC?

How might we create a solution that helps blind and low-vision (BLV) people better navigate indoor spaces?

How might we take the stress out of pre-planning for BLV people?

How might we create a technology that uses sensory inputs and tactile feedback to deliver navigation information?

How might we better inform sighted passersby of a disability? How might we raise awareness of the BLV community as a whole?

Discovery

We interviewed 43+ BLV users and 20+ subject matter experts, including people from Verizon’s 5G innovation Lab, Verizon’s accessibility group, and disability volunteer coordinators. We also ran empathy experiments to better understand the community’s day-to-day experience.

Early Findings

- People who are congenitally blind (blind from birth) and those who have been dealing with vision impairment for a long time are more in tune with their other senses than sighted individuals.

- People who are visually impaired plan their trips intensively, both short- and long-term, to ease navigation and avoid obstacles that prevent them from getting around.

- Sight canes and other wearable products (e.g. glasses) are essential tools for the BLV community; however, they have also evolved into symbols that signal one’s impairment to other pedestrians.

- Current way-finding technologies do a decent job of getting a user from one address to another but leave them stranded once they pass through the door. That “last mile,” such as finding a specific room, aisle, or product, poses major difficulties for a blind or low-vision person.

Diving Deeper

To develop our ideas, we purposefully chose to co-create with our users. We hosted a workshop at a local library with eleven BLV users. As empathetic as we tried to be, we knew we could never truly know what it was like to be our users. We asked our participants to focus on the following problem:

How might we improve your daily commute?

Findings

Using clay and LEGO bricks, we built solutions and shared them with the group. A few of the "winning" solutions were:

- Responsive sidewalks that vibrate to let pedestrians know to get out of the way

- A wand that can detect and describe different types of light to a user

- Haptic shoes

Based on these findings, we knew we needed haptic feedback to direct BLV users. We ultimately settled on a haptic feedback pad that could be easily worn anywhere on the body.

Testing and Validation

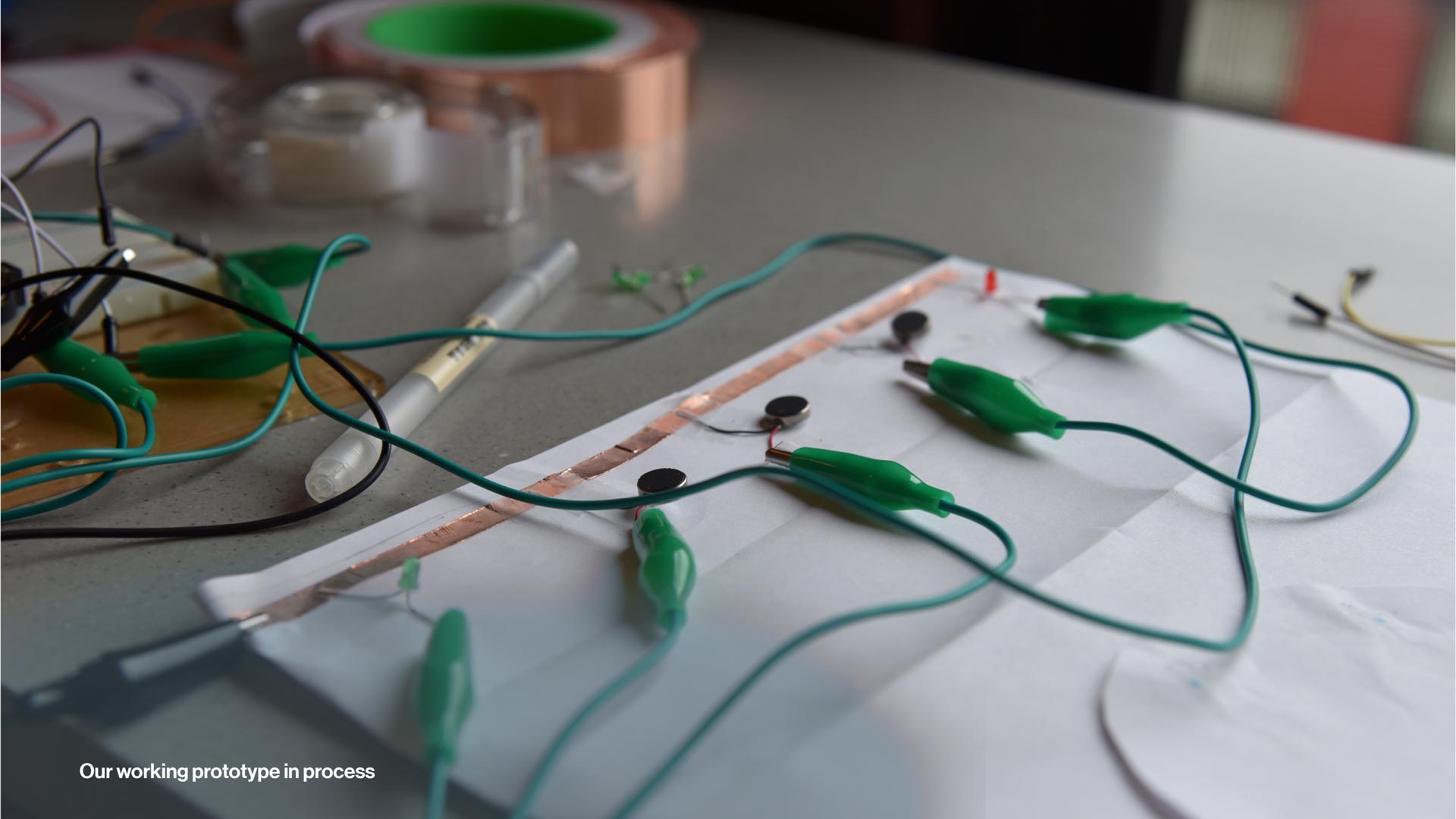

To test whether our idea was viable, we created a series of low-fidelity Arduino prototypes. Darshan and I led the Arduino development, teaching other team members how to use simple wiring and circuitry to create haptic feedback in the prototypes. We tested the pad with various users and gathered the following insights:

New Insights

- The preferred location for the pad was the shoulders, because users found feedback easiest to perceive there while navigating.

- A series of pulses worked well as a signal to continue going straight, while a faster-paced pulse told the user to stop.

- The pad would ultimately help direct BLV users in their daily commute.
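The two cues described above (a series of pulses for "continue straight" and a faster-paced pulse for "stop") can be modeled as simple on/off timing patterns. The following is a minimal sketch of that idea, not the prototype's actual firmware: the `PulsePattern` class, the `schedule` helper, and every timing value are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PulsePattern:
    on_ms: int    # how long the vibration motor stays on per pulse
    off_ms: int   # pause between pulses
    repeats: int  # number of pulses in one cue


# Hypothetical timings: the write-up only says that "stop" is faster-paced.
PATTERNS = {
    "continue": PulsePattern(on_ms=200, off_ms=600, repeats=3),
    "stop": PulsePattern(on_ms=100, off_ms=100, repeats=6),
}


def schedule(cue: str) -> List[Tuple[int, bool]]:
    """Return (timestamp_ms, motor_on) events for one cue."""
    pattern = PATTERNS[cue]
    events: List[Tuple[int, bool]] = []
    t = 0
    for _ in range(pattern.repeats):
        events.append((t, True))    # motor switches on
        t += pattern.on_ms
        events.append((t, False))   # motor switches off
        t += pattern.off_ms
    return events


def pulses_per_second(cue: str) -> float:
    """Pulse rate of a cue, used to compare how fast-paced two cues are."""
    pattern = PATTERNS[cue]
    return 1000 / (pattern.on_ms + pattern.off_ms)


if __name__ == "__main__":
    for name in PATTERNS:
        print(name, pulses_per_second(name), schedule(name)[:4])
```

On a microcontroller, each `(timestamp_ms, motor_on)` event would correspond to switching a vibration motor pin on or off at that moment. Keeping the patterns in a table makes it easy to tune timings during user testing.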

The Solution

Prior to venturing out of the house, people with vision impairments have to extensively pre-plan, which stifles spontaneity. On the way, they could encounter obstacles in their path—like major train delays or road construction—that derail their plans. These types of problems are typically easy for a sighted person to deal with, but for those with a visual impairment, they require immediate, up-to-date information that provides options for re-routing. The “last foot” in navigation is a serious challenge. For example, getting to a subway station is possible, but finding the correct platform proves difficult, especially if trains change.

As a result, we created Thea, a concept that offers a comprehensive solution to help visually impaired people explore their environments independently and safely.

Natural and conversational UI

Thea is a system that is easy to use and understand. It responds to requests like a real person, adapts to voice inputs, and provides an unparalleled navigation experience.

Intuitive haptic feedback

Visually impaired individuals rely heavily on their sense of hearing, so in congested, noisy areas Thea switches from audio to haptic feedback. Thea conveys directional information in an intuitive way: its haptic “language” orients users and provides complex directional information.
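One way to picture the switch from audio to haptic feedback is as a threshold rule on ambient noise. The sketch below is an illustration under stated assumptions, not a description of how Thea is built: the `choose_channel` function, the use of a decibel reading as the trigger, and both threshold values are hypothetical.

```python
def choose_channel(
    noise_db: float,
    current: str = "audio",
    to_haptic_db: float = 75.0,  # hypothetical: switch to haptics at or above this level
    to_audio_db: float = 65.0,   # hypothetical: return to audio at or below this level
) -> str:
    """Pick the feedback channel from the ambient noise level.

    Two separate thresholds are used so the channel does not flip back
    and forth when the noise level hovers around a single cutoff.
    """
    if current == "audio" and noise_db >= to_haptic_db:
        return "haptic"
    if current == "haptic" and noise_db <= to_audio_db:
        return "audio"
    return current


if __name__ == "__main__":
    channel = "audio"
    for reading in [60, 72, 78, 70, 66, 64]:
        channel = choose_channel(reading, channel)
        print(reading, channel)
```

The gap between the two thresholds is a design choice: it keeps the user on one channel through brief dips or spikes in noise, which avoids interrupting directions mid-route.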

Stretchy, wearable pad

Thea’s haptic feedback pad can be worn anywhere on the body. In our testing, users preferred wearing it on the shoulders, where feedback was easiest to perceive while navigating.